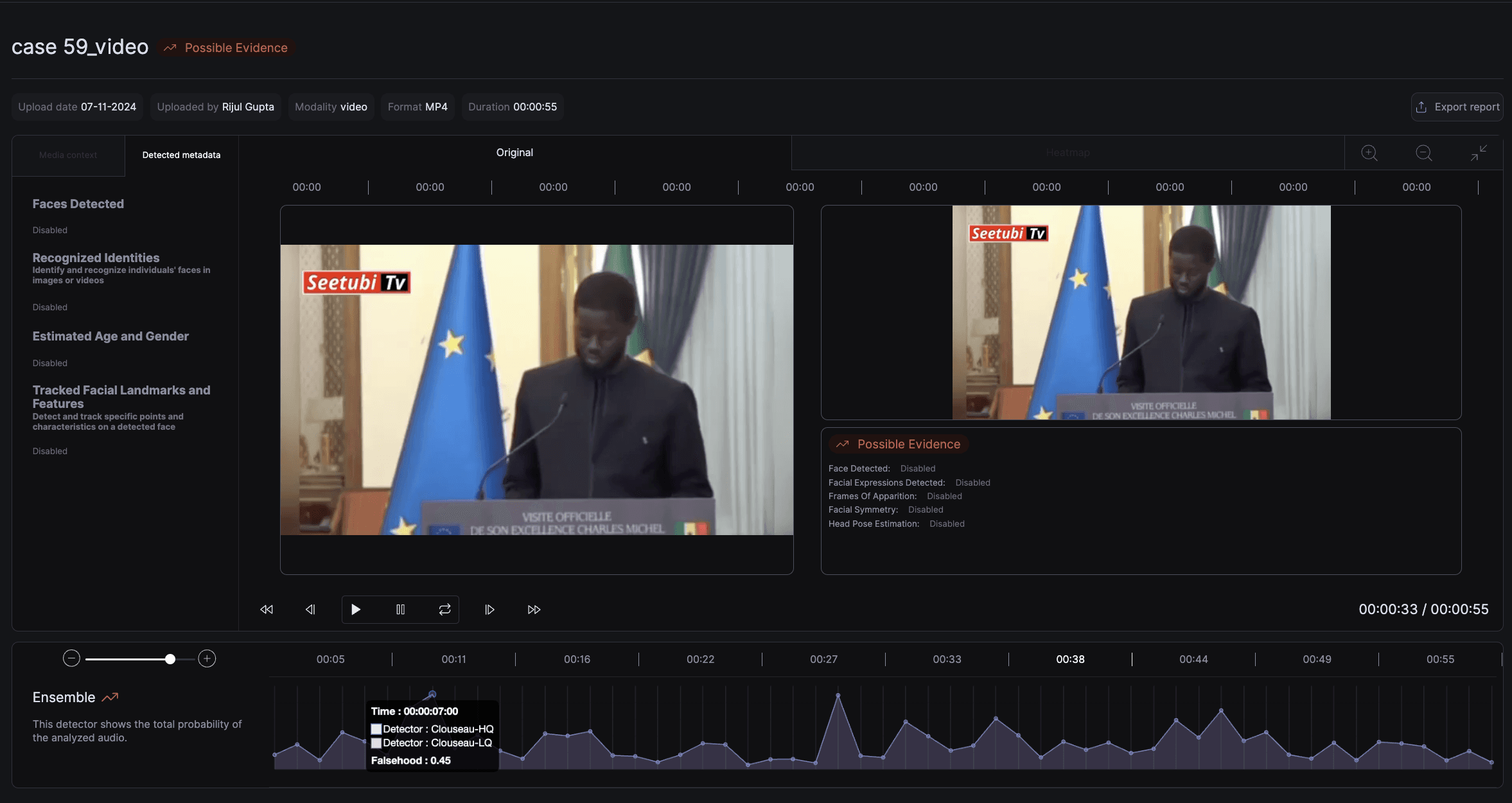

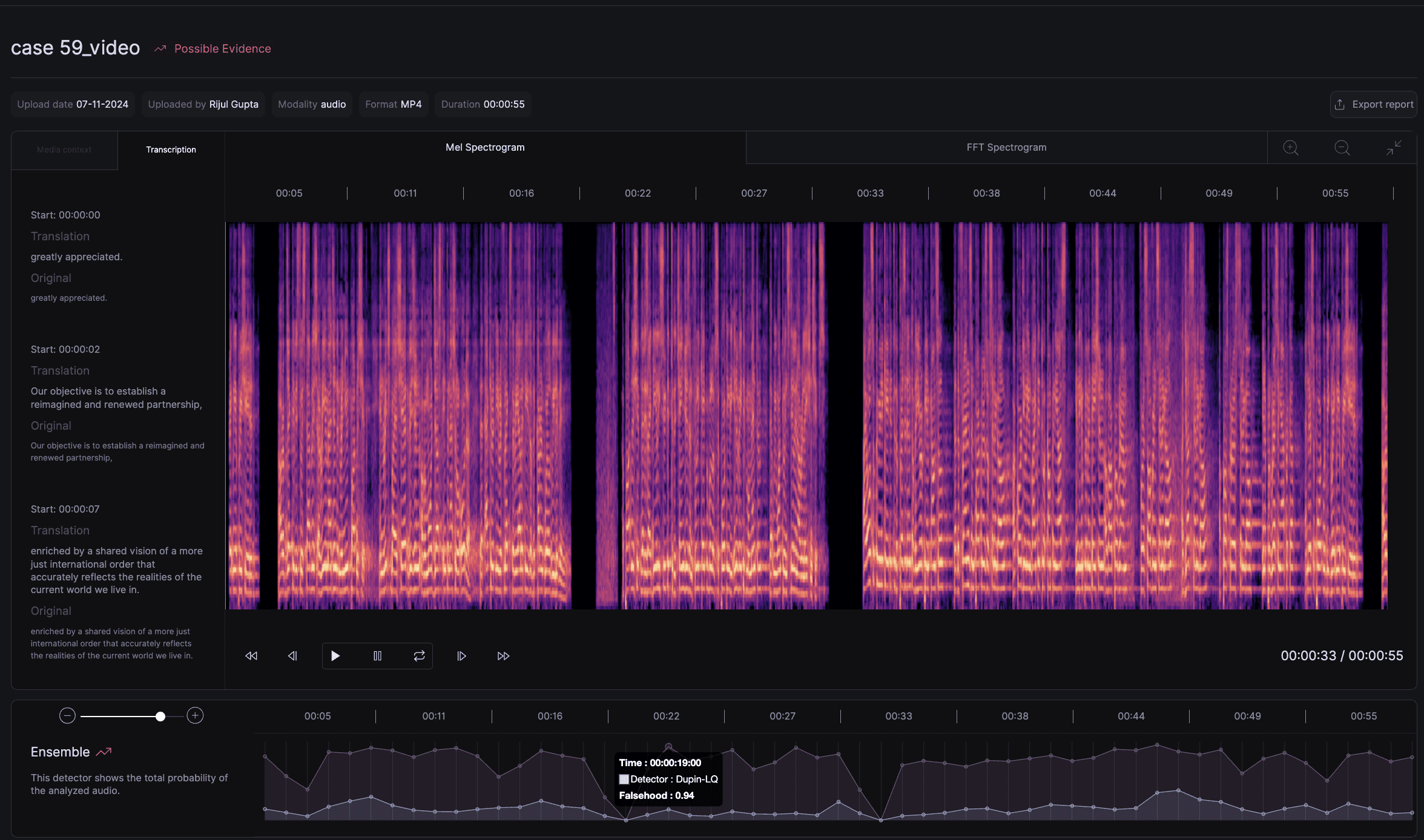

Deepfake Detection Report: President of Senegal, Bassirou Diomaye Faye

Published Nov 7, 2024

Date surfaced: 1 November 2024

Source: X

Modality: Face, Audio, Video

Deep Media’s Deepfake Detection Confidence: Evidence of Generative AI

Potential to Spread: Medium

Comments

Our analysis of a video portraying the President of Senegal speaking English reveals significant signs of manipulation, with a medium potential to spread. Key indicators include unusual face movements at 00:34, which resemble Wav2Lip artifacts or possibly DCT compression artifacts, and unexplained audio cuts around 00:17; both suggest the use of Generative AI. The voice clone in particular is notably convincing, closely matching the President’s tone and cadence, yet it is delivered at an unusually slow pace and lacks the emotion typical of a podium speech.

While this content may not strongly sway public opinion, it risks creating confusion and eroding trust in media authenticity. This underscores the importance of clearly labeling AI-manipulated media to maintain transparency and protect public trust. As advances in AI make these simulations increasingly sophisticated, proactive identification and labeling are essential to uphold media integrity and public confidence.