Welcome back to "This Week in AI," where we explore the most significant developments and thought-provoking discussions in the world of artificial intelligence. This week, we'll delve into three topics: the controversy surrounding the power and reach of X's Grok, OpenAI's partnership with Vogue publisher Condé Nast, and the US Army's increasing interest in AI technology. As we navigate these complex issues, it remains important to examine the technical aspects while also foregrounding the broader implications of these advancements for the future of both society and artificial intelligence.

Grok: The Uncharted Territory of Unregulated AI

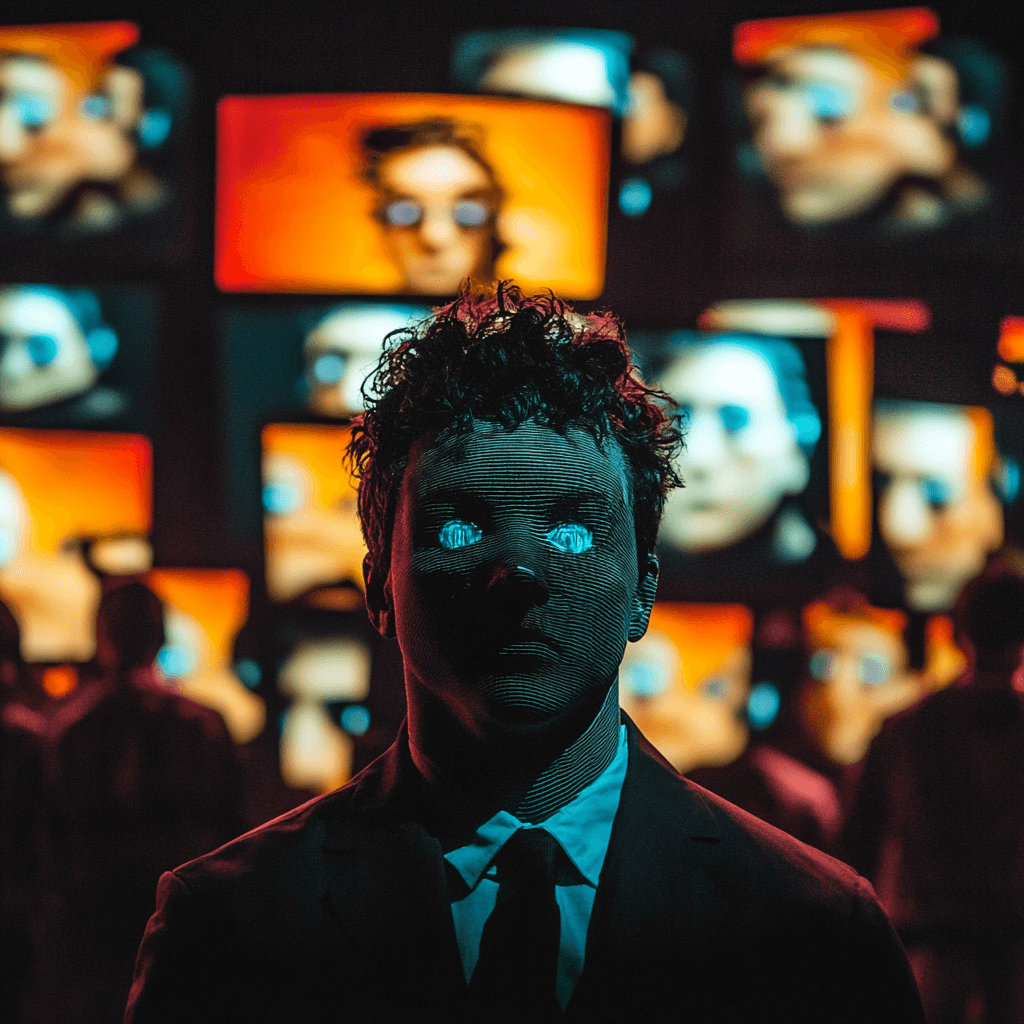

First, let's address perhaps the most publicly discussed innovation of the week: the new AI image generation feature in X's chatbot Grok, powered by Black Forest Labs' FLUX.1 model. FLUX.1 enables Grok-2 to generate highly detailed and realistic images, particularly of people. According to Black Forest Labs, FLUX.1 is a 12-billion-parameter rectified flow transformer, a diffusion-style architecture trained with flow matching rather than a GAN. The model captures fine-grained details and nuances, resulting in strikingly realistic images that often surpass the quality of those generated by other AI image generators.

However, the seamless integration of FLUX.1 into Grok-2 has raised concerns about the risks and ethical considerations of generating highly realistic images without sufficient safeguards in place. Users have noted that Grok-2 appears to have fewer guardrails than other AI image generators, which has led to the generation of disturbing and controversial content.

In recent days, users have reported generating highly realistic images of graphic violence, pornography, and other explicit content using Grok-2's image generation feature. The ease with which these images can be created and shared has sparked a heated debate about the responsibilities of AI companies in preventing the misuse of their technologies. Critics argue that the lack of proper safeguards and content moderation in Grok-2 could lead to the proliferation of harmful and offensive content, with potential consequences for individuals and society as a whole.

As the field of AI image generation continues to evolve rapidly, it is crucial to engage in open and thoughtful discussions about the ethical considerations and potential consequences of these technologies. Researchers, policymakers, and industry leaders must collaborate to establish guidelines and best practices that promote the responsible use of AI while harnessing its potential to drive innovation and creativity. This includes implementing robust content moderation systems, establishing clear guidelines for appropriate use, and educating users about the potential risks and limitations of AI-generated content.

The controversy surrounding Grok-2's image generation feature serves as a stark reminder of the need for responsible development and deployment practices in the field of AI. As we push the boundaries of what is possible with these technologies, we must remain vigilant in addressing the ethical implications and potential consequences of their use. Only by fostering a culture of responsibility and accountability can we ensure that the benefits of AI are realized while mitigating the risks and negative impacts on society.

OpenAI's Pioneering Partnership with Condé Nast

Next, let's explore the groundbreaking partnership between OpenAI and Condé Nast, the renowned publisher of Vogue magazine. This collaboration aims to harness the power of generative AI to revolutionize the fashion and media industries, paving the way for personalized content creation and enhanced creative processes.

From a technical standpoint, the integration of OpenAI's cutting-edge language models and generative algorithms with Condé Nast's vast repository of fashion and media data could yield remarkable results, and the potential applications of this technology are vast. For instance, the AI system could generate personalized fashion recommendations based on an individual's preferences, body type, and occasion. By analyzing a user's browsing history, social media activity, and other data points, the AI could create tailored content that resonates with their unique style and interests.
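To make the recommendation idea concrete, here is a minimal sketch of content-based matching: a user's preferences and each catalog item are represented as tag counts, and items are ranked by cosine similarity to the user profile. Nothing here reflects how OpenAI or Condé Nast actually build their systems; the function names (`cosine`, `recommend`), the tags, and the catalog are all hypothetical and chosen purely for illustration.

```python
from collections import Counter
from math import sqrt


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse tag-count vectors."""
    dot = sum(a[tag] * b[tag] for tag in a.keys() & b.keys())
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty profile or item matches nothing
    return dot / (norm_a * norm_b)


def recommend(profile: Counter, catalog: dict[str, Counter], top_k: int = 2) -> list[str]:
    """Return the top_k catalog items most similar to the user's profile."""
    ranked = sorted(
        catalog,
        key=lambda name: cosine(profile, catalog[name]),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical user profile, e.g. tag counts derived from browsing history.
profile = Counter({"minimalist": 3, "linen": 2, "summer": 1})

# Hypothetical catalog: each item is described by a handful of tags.
catalog = {
    "linen shirt": Counter({"linen": 1, "summer": 1, "minimalist": 1}),
    "wool coat": Counter({"wool": 1, "winter": 1}),
    "silk scarf": Counter({"silk": 1, "summer": 1}),
}

print(recommend(profile, catalog))  # ['linen shirt', 'silk scarf']
```

A production system would likely use learned embeddings rather than hand-written tags, but the ranking step tends to follow the same shape: score every candidate against the profile, then keep the best few.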

Moreover, the AI could be used to create dynamic and interactive content experiences. Imagine a virtual fashion assistant that can answer questions, provide styling tips, and even generate visualizations of how an outfit might look on a particular individual. Or consider an AI-powered media platform that adapts its content in real-time based on user engagement and feedback, ensuring that each reader receives a personalized and engaging experience.

However, it is important to consider not only whether this technology can be used in this way, but also whether it should be. Do you really need your fashion bot to browse your social media activity, or to know all sorts of details about your body type?

Additionally, the role of human creativity and expertise in the fashion and media industries must not be overlooked. While AI can assist and augment human efforts, it should not replace the unique perspective and intuition that human creators bring to the table. Finding the right balance between AI-generated content and human input will be key to leveraging the benefits of this technology while preserving the essence of creative industries.

The US Army's AI-Powered Future

Lastly, let's examine the US Army's growing interest in AI technology for military applications. As the Army invests in the development of AI-powered systems for various purposes, such as predictive maintenance, situational awareness, and decision support, it is essential to consider the technical aspects and the ethical implications of these advancements.

From a technical perspective, the integration of AI in military systems could lead to significant improvements in efficiency, accuracy, and real-time decision-making. By leveraging advanced machine learning algorithms, deep learning models, and computer vision techniques, AI-powered systems could enhance the Army's capabilities in areas such as object detection, threat assessment, and resource allocation.

However, the use of AI in military contexts also raises complex ethical questions, particularly concerning autonomous weapons systems and the potential for unintended consequences. As the US Army explores the possibilities of AI, it is crucial to engage in open and transparent discussions about the responsible development and deployment of these technologies, ensuring that they align with ethical principles and international laws.

Conclusion

This week's developments in AI highlight the incredible potential and the profound challenges that lie ahead. From the unregulated landscape of Grok, to the pioneering partnership between OpenAI and Condé Nast, to the ethical complexities of military AI applications, we are witnessing a transformative era in the history of artificial intelligence.

As we navigate this rapidly evolving landscape, it is imperative that we approach these advancements with a balance of enthusiasm and caution. By fostering collaboration among stakeholders, establishing robust regulatory frameworks, and prioritizing ethical considerations, we can work towards a future where AI benefits society as a whole.

As we continue to push the boundaries of what's possible with AI, let us remain committed to responsible innovation, transparency, and the pursuit of knowledge that serves the greater good. Until next time, stay curious, stay informed, and let’s all keep changing the world.
